Welcome to the DataJoint Documentation

- DataJoint Python

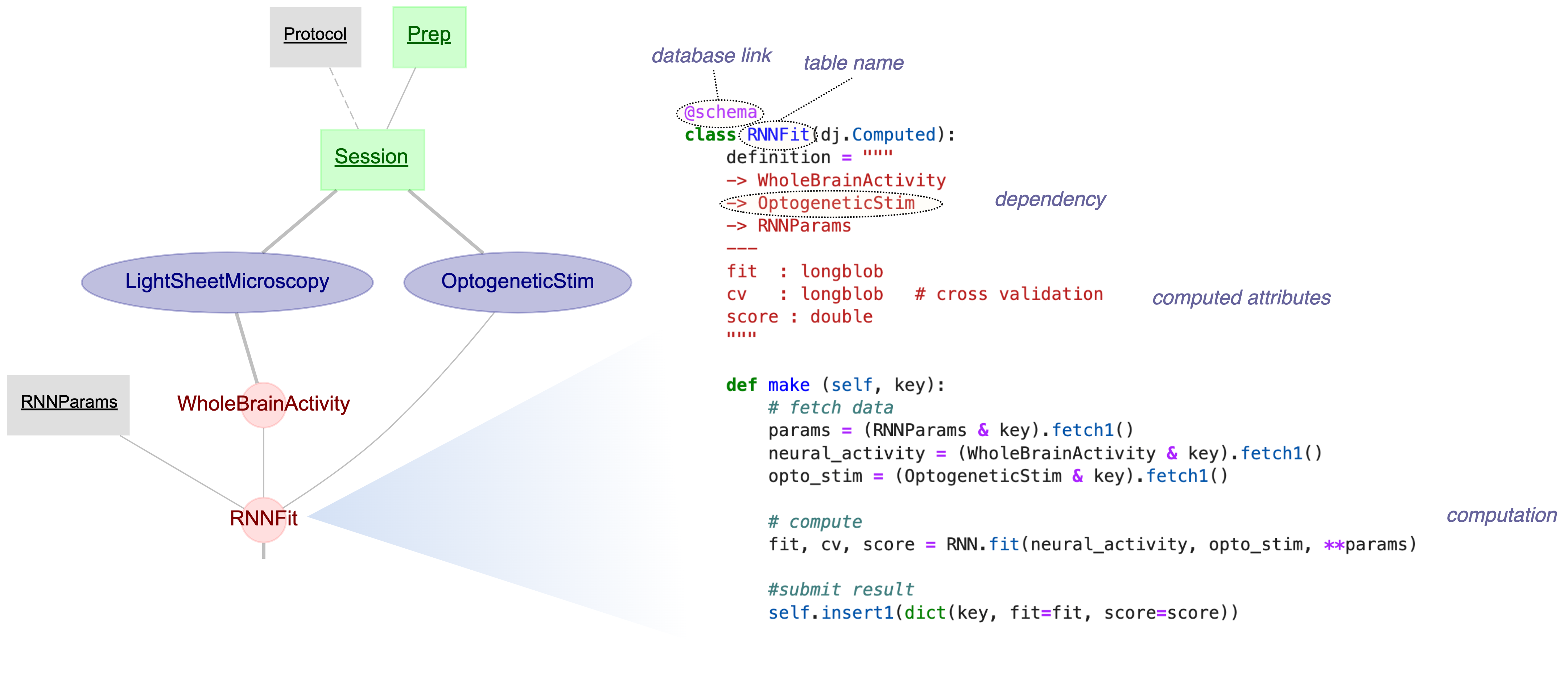

Open-source framework for defining, operating, and querying data pipelines

- DataJoint Elements

Open-source implementation of data pipelines for neuroscience studies

- DataJoint Works

A cloud platform for automated analysis workflows that relies on DataJoint Python and DataJoint Elements

- Project Showcase

Projects and research teams supported by DataJoint software